Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

Advancements in artificial intelligence (AI) have led to the creation of deepfakes, which are increasingly difficult to distinguish from real photos. However, a recent study has shed light on a potential method to identify deepfakes by focusing on the eyes and analyzing their reflections.

Researchers presented their findings at the Royal Astronomical Society’s National Astronomy Meeting in Hull, England. They drew inspiration from the field of astronomy, where astronomers use a “Gini coefficient” to measure how light is spread across images of galaxies. This measure helps categorize galaxies based on their shape.

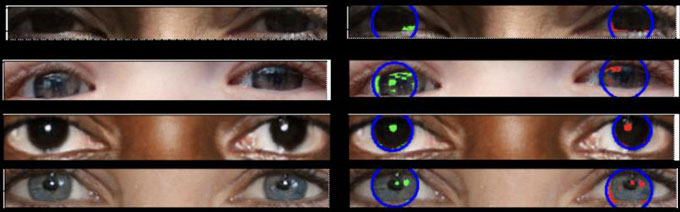

Applying this concept to photos, the researchers developed a computer program to detect eye reflections in pictures of people. From the pixel values in each reflection, they calculated the Gini coefficient (also called the Gini index) for each eye, which measures how evenly light is distributed across the pixels in the reflection.
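As a rough illustration of the calculation described above, the following Python sketch computes a Gini coefficient from a flat list of pixel brightness values. The exact formula and normalization the researchers used are not given here, so this version uses a common sorted-values form. The function name `gini` and the sample values are assumptions for illustration, not the study's implementation.

```python
def gini(pixels):
    """Gini coefficient of a flat sequence of non-negative pixel values.

    Assumed normalization: 0.0 when light is spread evenly across all
    pixels, 1.0 when all of the light sits in a single pixel.
    """
    values = sorted(pixels)
    n = len(values)
    total = sum(values)
    if n < 2 or total == 0:
        # Not enough data (or no light at all) to measure a spread.
        return 0.0
    # Rank-weighted sum over the sorted values, ranks starting at 1.
    weighted = sum((2 * i - n - 1) * v for i, v in enumerate(values, start=1))
    return weighted / (total * (n - 1))


# Made-up pixel values, for illustration only.
print(gini([5, 5, 5, 5]))   # evenly spread light: 0.0
print(gini([0, 0, 0, 10]))  # all light in one pixel: 1.0
```

Under this normalization, a diffuse reflection scores close to 0 and a single sharp highlight scores close to 1.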

In real images, the reflections in both eyes generally match, showing, for example, the same number of windows or ceiling lights. In deepfake images, this consistency is often absent: the reflections in AI-generated pictures frequently do not match, which is physically inconsistent for two eyes looking at the same scene.

According to Kevin Pimbblet, an astronomer at the University of Hull involved in the research, the difference in the Gini coefficient between the left and right eye can serve as a red flag for identifying deepfakes. The study found that for approximately 70% of the analyzed deepfake images, the difference in the Gini index was significantly greater than that of real images.
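The left-versus-right comparison can be sketched in a few lines of Python. This is a minimal sketch, not the researchers' code: the `gini` helper uses an assumed normalization, and `THRESHOLD`, `needs_review`, and the pixel values are hypothetical. The article does not state what difference counts as significant.

```python
def gini(pixels):
    """Gini coefficient of non-negative pixel values (assumed normalization)."""
    values = sorted(pixels)
    n = len(values)
    total = sum(values)
    if n < 2 or total == 0:
        return 0.0
    weighted = sum((2 * i - n - 1) * v for i, v in enumerate(values, start=1))
    return weighted / (total * (n - 1))


def gini_difference(left_pixels, right_pixels):
    """Absolute difference between the two eyes' Gini coefficients."""
    return abs(gini(left_pixels) - gini(right_pixels))


# Hypothetical cutoff chosen for this example only.
THRESHOLD = 0.1


def needs_review(left_pixels, right_pixels, threshold=THRESHOLD):
    """True when the eyes differ enough to warrant a closer human look."""
    return gini_difference(left_pixels, right_pixels) > threshold


# Made-up reflection patches: two similar ones and one that does not match.
left = [10, 12, 200, 210, 11, 9]
right_match = [11, 10, 205, 198, 12, 10]
right_mismatch = [90, 95, 100, 105, 98, 92]

print(needs_review(left, right_match))     # similar reflections
print(needs_review(left, right_mismatch))  # mismatched reflections
```

A `True` result here is only a red flag, in line with Pimbblet's caution that a large difference does not by itself prove an image is fake.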

While this technique is not foolproof, it provides a valuable tool for further investigation. Pimbblet emphasizes that a significant difference in the Gini index does not definitively indicate fakery, but it signals the need for closer human examination.

It is important to note that this method has its limitations. Factors such as blinking or proximity to a light source can affect the accuracy of the analysis. Additionally, the technique may be more challenging to apply to videos.

Nevertheless, the study’s findings offer a promising avenue for detecting deepfakes. By focusing on the eyes and leveraging the Gini coefficient, researchers have identified a potential indicator of artificial manipulation in images. As AI continues to evolve, it is crucial to develop effective methods to identify and combat the spread of deepfakes.

The next part of this series explores the effects of using eye reflections to spot deepfakes.

The study’s findings regarding the analysis of eye reflections in deepfake images have significant implications for the detection and mitigation of AI-generated manipulations. By leveraging the Gini coefficient and focusing on inconsistencies in eye reflections, researchers have identified a potential tool to combat the spread of deepfakes.

One of the immediate effects of this research is an advance in deepfake detection techniques. Traditional methods of identifying deepfakes often rely on analyzing facial features or inconsistencies in pixel patterns, and sophisticated AI algorithms can deceive them. The analysis of eye reflections provides a new avenue for detection, one that may be less susceptible to manipulation because it checks whether the image is physically consistent.

By examining the Gini index difference between the left and right eye reflections, experts can flag images that exhibit significant discrepancies. This serves as an initial screening process, allowing human experts to further investigate potentially manipulated content. While the technique is not foolproof, it adds an additional layer of scrutiny to identify potential deepfakes.
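One way to picture this screening step is as a review queue: images whose left and right Gini values differ by more than some cutoff are passed to a human, largest difference first. The sketch below assumes the per-eye Gini values have already been computed. The `EyeScores` type, the `review_queue` function, the threshold, and all of the numbers are hypothetical.

```python
from typing import NamedTuple


class EyeScores(NamedTuple):
    """Precomputed Gini values for the two eye reflections of one image."""
    image_id: str
    gini_left: float
    gini_right: float


def review_queue(scores, threshold):
    """Return images above the cutoff, largest left/right difference first."""
    def difference(s):
        return abs(s.gini_left - s.gini_right)

    flagged = [s for s in scores if difference(s) > threshold]
    return sorted(flagged, key=difference, reverse=True)


# Made-up scores, for illustration only.
scores = [
    EyeScores("a.png", 0.62, 0.61),
    EyeScores("b.png", 0.70, 0.35),
    EyeScores("c.png", 0.55, 0.40),
]

queue = review_queue(scores, threshold=0.1)
print([s.image_id for s in queue])  # ['b.png', 'c.png']
```

Images that do not reach the queue are not thereby shown to be genuine; the cutoff only decides which ones a person looks at first.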

Another effect of this research is the potential to raise awareness among the general public about the existence and prevalence of deepfakes. As deepfake technology becomes more accessible and sophisticated, the risk of misinformation and manipulation increases. Highlighting the specific characteristics that can be used to identify deepfakes can help individuals become more discerning consumers of digital content.

Furthermore, the development of this technique may encourage the integration of eye reflection analysis into digital platforms and social media networks. As the technology evolves, it could be incorporated as an automated tool to flag potentially manipulated images and videos. This would provide an additional layer of protection against the spread of deepfakes on a larger scale.

However, it is important to consider the potential limitations and challenges associated with this technique. The analysis of eye reflections may not be applicable in all scenarios, such as instances where individuals are blinking or positioned in close proximity to a light source. Ongoing research and refinement of the methodology will be necessary to address these limitations and improve the accuracy of deepfake detection.

In conclusion, the analysis of eye reflections and the utilization of the Gini coefficient offer a promising approach to identify deepfakes. This research provides a valuable contribution to the ongoing efforts to combat the harmful effects of AI-generated manipulations. As technology continues to evolve, it is crucial to develop robust and effective methods to detect and mitigate the spread of deepfakes, safeguarding the integrity of digital content and promoting trust in online platforms.
