Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

Nvidia has announced the release of ‘Chat with RTX,’ a personal AI chatbot designed for Windows PCs. This new chatbot allows users to enhance security and privacy by maintaining their data locally while adding custom context to queries. The demo app, Chat with RTX, is currently available for free download, enabling users to personalize the chatbot with their own content and data sources.

Chat with RTX, running locally on Windows RTX PCs and workstations, enables users to keep their data on their devices while obtaining quick results. Unlike cloud-based language model services, this chatbot allows users to process sensitive data locally without the need to share it with third parties or rely on internet connectivity.

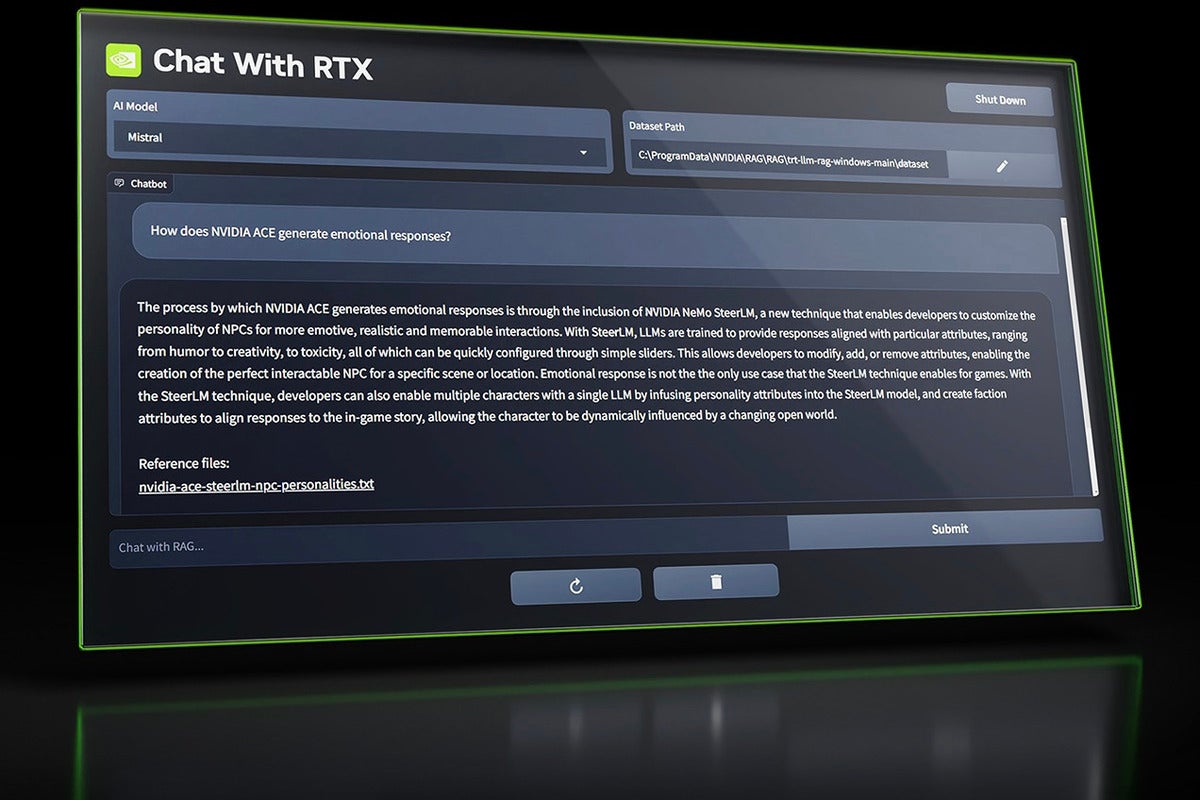

Chat with RTX lets users choose between two open-source language models, Mistral or Llama 2. Running the chatbot requires an Nvidia GeForce RTX 30 series GPU or higher with at least 8GB of video RAM. The chatbot is compatible with Windows 10 or 11 and can be customized to meet individual preferences.
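As a rough illustration, the requirements listed above can be written as a simple check. This is a minimal sketch, not Nvidia's installer logic: the `SystemSpec` class, the `meets_requirements` function, and the constant names are hypothetical, and the values are entered by hand rather than detected from the hardware.

```python
from dataclasses import dataclass

# Thresholds taken from the requirements stated in the article.
MIN_RTX_SERIES = 30          # GeForce RTX 30 series or higher
MIN_VRAM_GB = 8              # at least 8GB of video RAM
SUPPORTED_WINDOWS = {10, 11}


@dataclass
class SystemSpec:
    """Hypothetical description of a PC; values are supplied manually."""
    rtx_series: int       # e.g. 30 for an RTX 3060, 40 for an RTX 4070
    vram_gb: float
    windows_version: int  # 10 or 11


def meets_requirements(spec: SystemSpec) -> bool:
    """Return True if the spec satisfies all three stated requirements."""
    return (
        spec.rtx_series >= MIN_RTX_SERIES
        and spec.vram_gb >= MIN_VRAM_GB
        and spec.windows_version in SUPPORTED_WINDOWS
    )


if __name__ == "__main__":
    print(meets_requirements(SystemSpec(rtx_series=30, vram_gb=8, windows_version=11)))
    print(meets_requirements(SystemSpec(rtx_series=20, vram_gb=8, windows_version=11)))
```

The first call describes a machine that exactly meets the minimums; the second fails only because of the older GPU series.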

The chatbot simplifies search by letting users type a query instead of manually looking through stored content. It supports several file formats, including text, PDF, doc/docx, and XML. Users can also add data from relevant folders to the chatbot’s library.
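To make the folder-based workflow concrete, here is a minimal sketch of how files in the supported formats could be gathered from a folder before being added to a library. It does not reflect how Chat with RTX is implemented: the `collect_supported_files` function is hypothetical, and mapping "text" to the `.txt` extension is an assumption.

```python
from pathlib import Path

# Extensions for the formats named in the article.
# Treating "text" as ".txt" is an assumption.
SUPPORTED_EXTENSIONS = {".txt", ".pdf", ".doc", ".docx", ".xml"}


def collect_supported_files(folder):
    """Recursively list files under `folder` whose extension is supported."""
    root = Path(folder)
    return sorted(
        path
        for path in root.rglob("*")
        if path.is_file() and path.suffix.lower() in SUPPORTED_EXTENSIONS
    )


if __name__ == "__main__":
    # Scan the current directory as an example.
    for path in collect_supported_files("."):
        print(path)
```

The extension comparison is case-insensitive, so a file named `REPORT.PDF` is picked up alongside `notes.txt`, while images and other unsupported types are skipped.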

Developers can build and customize their own chatbot applications using the TensorRT-LLM RAG (retrieval-augmented generation) developer reference project available on GitHub.

Nvidia’s Chat with RTX aligns with the company’s mission to popularize AI and make it accessible across multiple platforms. By offering a personalized chatbot that operates locally, Nvidia aims to increase worker productivity while minimizing privacy concerns. The chatbot keeps conversations within the user’s local environment, reducing the risk of sensitive information exposure.

As the adoption of genAI-based chatbots increases, so do security and privacy concerns. Nvidia addresses these concerns by limiting data and responses to the user’s local environment. This significantly reduces the risk of data leakage and unauthorized access to sensitive information.

Companies should maintain ongoing supervision of the data employees enter into language models. Policies on the responsible use of generative AI can be communicated, but continuous monitoring is still needed to keep sensitive company data from being entered.

Nvidia’s efforts to make AI accessible across various platforms, from cloud to server and edge computing, solidify its position as a hardware and software provider. Chat with RTX exemplifies these efforts by providing users with the convenience and powerful performance of AI in a local environment.

Overall, Nvidia’s ‘Chat with RTX’ presents a promising solution for a personalized AI chatbot that combines security, privacy, and improved user productivity. It offers users the ability to enhance their data privacy while obtaining fast and customized results, all within their local environment.

Source: Computerworld

If you’re wondering where the article came from!
