Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

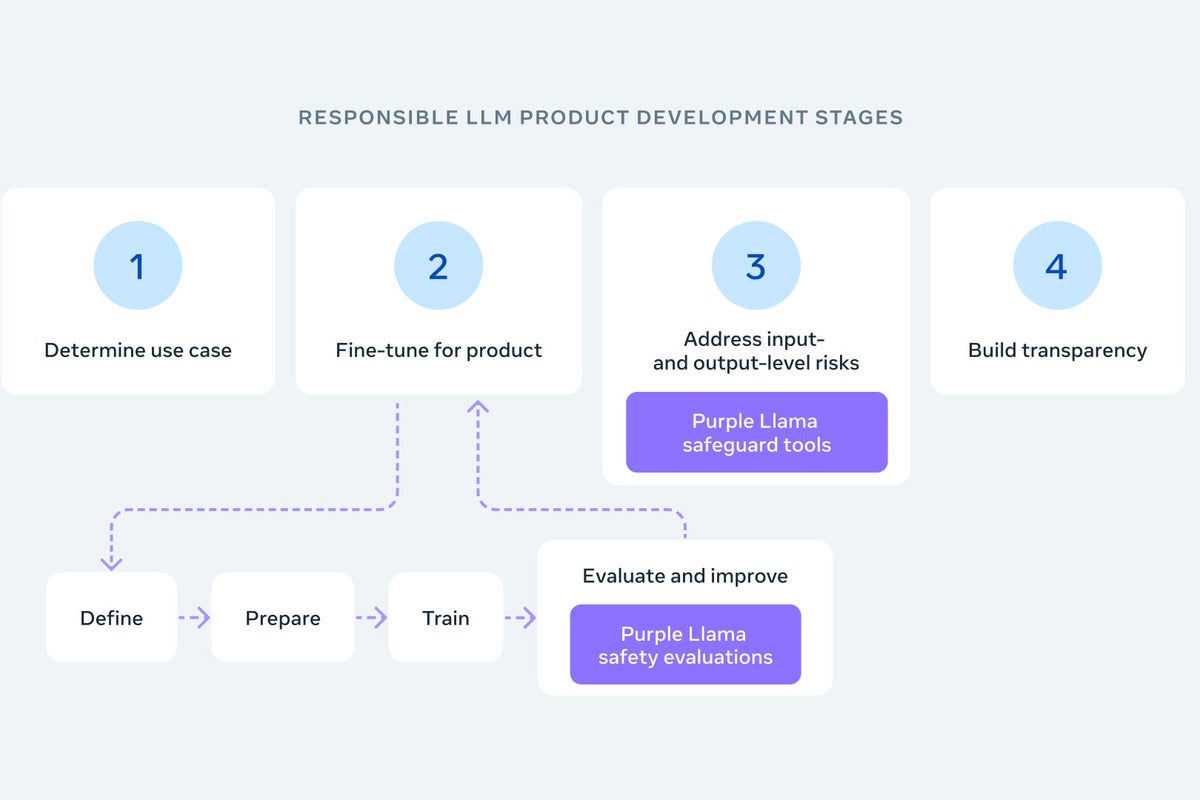

Meta, a leading technology company, has taken a significant step toward improving the safety and trustworthiness of generative AI models. The company has introduced Purple Llama, an open-source project dedicated to building tools that evaluate and improve the safety of these models before they are deployed publicly. The launch comes amid mounting concerns about the potential risks of large language models and other AI technologies.

Meta emphasizes the need for collaborative efforts in addressing the challenges of AI safety. The company firmly believes that these challenges cannot be tackled in isolation. In a blog post, Meta stated that the goal of Purple Llama is to establish a shared foundation for developing safer generative AI models. By involving various stakeholders and fostering collaboration, Meta aims to create a center of mass for open trust and safety in the AI community.

The introduction of Purple Llama has been met with positive feedback from experts in the field. Gareth Lindahl-Wise, Chief Information Security Officer at the cybersecurity firm Ontinue, views Purple Llama as a positive and proactive step towards safer AI. Lindahl-Wise acknowledges that some may question the motives behind the project, but says the overall benefit of improved consumer-level protection cannot be denied. He adds that entities with stringent obligations will still need to carry out robust evaluations of their own, but that Purple Llama can help rein in potential risks in the AI ecosystem.

Meta’s Purple Llama project involves partnerships with various key players in the technology industry. Collaborations with AI developers, cloud services like AWS and Google Cloud, semiconductor companies such as Intel, AMD, and Nvidia, and software firms including Microsoft aim to produce comprehensive tools for both research and commercial use. These tools will enable developers to test the capabilities of their AI models and identify potential safety risks.

The first set of tools released through Purple Llama includes CyberSecEval and Llama Guard. CyberSecEval is a set of benchmarks for assessing the cybersecurity risks of large language models. Developers can use it to test whether their models are prone to suggesting insecure code or to complying with requests that would help carry out cyberattacks. Meta’s research found that large language models often suggest vulnerable code, which underscores the need for continuous testing and improvement of AI security.
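To make "prone to suggesting insecure code" concrete, here is a minimal sketch of the kind of measurement involved: scan a batch of model-generated code samples with static pattern rules and report the fraction that trip at least one rule. This is not CyberSecEval’s code or API. The `Rule` type, the `flag_sample` and `insecure_rate` functions, and the two regex rules are hypothetical, heavily simplified stand-ins for a real detector’s much larger rule set.

```python
import re
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Rule:
    name: str
    pattern: re.Pattern


# Hypothetical, illustrative rules only; a real insecure-code detector
# uses a far larger and more careful rule set.
RULES = [
    Rule("weak-hash-md5", re.compile(r"hashlib\.md5\(")),
    Rule("shell-injection", re.compile(r"subprocess\.\w+\(.*shell\s*=\s*True")),
]


def flag_sample(code: str) -> List[str]:
    """Return the names of all rules that match one generated code sample."""
    return [rule.name for rule in RULES if rule.pattern.search(code)]


def insecure_rate(samples: List[str]) -> float:
    """Fraction of samples that trigger at least one rule (0.0 if empty)."""
    if not samples:
        return 0.0
    flagged = sum(1 for sample in samples if flag_sample(sample))
    return flagged / len(samples)
```

For example, over three samples where one calls `hashlib.md5(...)`, one calls `subprocess.run(cmd, shell=True)` and one uses `hashlib.sha256(...)`, `insecure_rate` returns 2/3. Pattern matching is used here only because it is easy to follow; it misses many real vulnerabilities and can produce false positives.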

Llama Guard, another tool released through Purple Llama, is a large language model trained to identify potentially harmful or offensive language. Developers can use Llama Guard to check both the prompts their models receive and the responses they produce, filtering out inputs that might lead to inappropriate outputs. Together, these tools give developers the means to evaluate and improve the safety of their AI models, contributing to the overall goal of creating more trustworthy and responsible AI systems.
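Because Llama Guard’s verdict comes back as generated text, an application still has to turn it into a decision. The sketch below assumes the output format described on the original Llama Guard model card: a first line reading `safe` or `unsafe` and, when unsafe, a second line of comma-separated category codes such as `O3`. The model call itself is left out. `Verdict`, `parse_verdict` and `should_forward` are hypothetical names for illustration, not part of any Meta library.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class Verdict:
    safe: bool
    categories: List[str] = field(default_factory=list)


def parse_verdict(raw: str) -> Verdict:
    """Parse classifier text of the assumed form 'safe' or 'unsafe\\nO1,O3'."""
    # Keep non-empty, trimmed lines of the classifier's text output.
    lines = [line.strip() for line in raw.splitlines() if line.strip()]
    # Only an explicit "safe" on the first line counts as safe (fail closed).
    if lines and lines[0].lower() == "safe":
        return Verdict(safe=True)
    # Assumed format: the line after "unsafe" lists codes like "O1,O3".
    codes: List[str] = []
    if len(lines) > 1:
        codes = [c.strip() for c in lines[1].split(",") if c.strip()]
    return Verdict(safe=False, categories=codes)


def should_forward(raw: str, blocked: Optional[Set[str]] = None) -> bool:
    """Decide whether a prompt may be passed on to the main model."""
    verdict = parse_verdict(raw)
    if verdict.safe:
        return True
    # With no block list, or no category codes to inspect, block the prompt.
    if blocked is None or not verdict.categories:
        return False
    # Otherwise block only if a flagged category is on the block list.
    return not any(code in blocked for code in verdict.categories)
```

Anything other than an explicit `safe` is treated as unsafe, so an empty or malformed classifier response blocks the prompt rather than letting it through. The optional `blocked` set lets an application enforce only the categories relevant to its use case.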

Meta’s introduction of open-source tools for AI safety through Purple Llama marks a significant step towards addressing the concerns surrounding generative AI models. By fostering collaboration, Meta aims to establish a shared foundation for developing safer AI and creating a center of mass for open trust and safety. The partnerships with industry leaders and the release of comprehensive evaluation tools demonstrate Meta’s commitment to enhancing AI safety. As the AI landscape continues to evolve, these tools will play a crucial role in ensuring the responsible and secure development of AI technologies.

The introduction of Meta’s Purple Llama project and its open-source tools for AI safety is expected to have a significant impact on the development and deployment of generative AI models. These tools aim to enhance the trustworthiness and safety of AI systems, addressing concerns about potential risks and ethical implications. The effect of Meta’s initiative can be observed in several key areas:

One of the primary effects of Meta’s open-source tools is the improved trustworthiness of generative AI models. By providing developers with comprehensive evaluation tools, such as CyberSecEval and Llama Guard, Meta enables them to identify and mitigate potential safety risks. This, in turn, increases the reliability and accountability of AI systems, fostering trust among users and stakeholders.

Meta’s tools, particularly CyberSecEval, play a crucial role in strengthening AI security. By measuring how often a model suggests insecure code or assists with cyberattack requests, CyberSecEval lets developers address vulnerabilities in their AI models proactively, while Llama Guard complements it by flagging harmful or inappropriate text. This proactive approach contributes to a more secure AI ecosystem, protecting against potential threats and supporting the responsible use of AI technologies.

Meta’s emphasis on collaborative efforts in ensuring AI safety has a ripple effect on the broader AI community. By involving AI developers, cloud services, semiconductor companies, and software firms, Meta creates a shared foundation for developing safer AI models. This collaborative approach fosters knowledge sharing, best practices, and collective responsibility, leading to a more comprehensive and effective approach to AI safety.

The availability of open-source tools for AI safety encourages developers to adopt responsible practices in the development and deployment of AI models. With the ability to evaluate the capabilities and potential risks of their AI systems, developers can make informed decisions and take necessary precautions. This encourages attention to ethics, transparency, and accountability in the AI industry, helping ensure that AI technologies are developed and used responsibly.

Meta’s open-source tools also contribute to the advancement of AI research and innovation. By providing researchers with comprehensive evaluation tools, Meta enables them to push the boundaries of AI while keeping safety and ethics in view. This encourages further exploration and experimentation in the field, which could lead to new breakthroughs in AI technology.

Overall, the effect of Meta’s open-source tools for AI safety is a more trustworthy, secure, and responsible AI ecosystem. Through improved trustworthiness of generative AI models, enhanced AI security, a collaborative approach to AI safety, responsible development and deployment of AI, and the advancement of AI research and innovation, Meta’s initiative sets a positive trajectory for the future of AI technologies.
